Minimalistic Logging Facade

cds.log (id?, options?)

Returns a logger identified by the given id.

const LOG = cds.log('sql')

LOG.info ('whatever', you, 'like...')Arguments

id?— the id for which a logger is requested — default:'cds'options?— alternative tolevelpass an options object with:level?— specify a string instead ofoptionsas a shorthand for{level}

// all following are equivalent...

const LOG = cds.log('foo', 'warn') //> shorthand for:

const LOG = cds.log('foo', { level: 'warn' })

// including case-insensitivity...

const LOG = cds.log('foo', 'WARN') //> shorthand for:

const LOG = cds.log('foo', { level: 'WARN' })Logger id — cached & shared loggers

The loggers constructed by cds.log() are cached internally, and the same instances are returned on subsequent invocations of cds.log() with the same id. This allows to use and share the same logger in different modules.

const LOG1 = cds.log('foo')

const LOG2 = cds.log('foo')

console.log (LOG1 === LOG2) //> trueLogger label — used to prefix log output

By default, each log output is prefixed with [<id>] -, for example, as in [cds] - server listening . Sometimes you may want to use different ids and labels. Use option label to do so as in this examples:

const LOG = cds.log('foo',{label:'bar'})

LOG.info("it's a foo")

//> [bar] - it's a fooLogger usage → much like console

Loggers returned by cds.log() look and behave very much like JavaScript's standard console object a log method for each log level:

cds.log() → {

trace(...), _trace,

debug(...), _debug,

info(...), _info, log(...), // alias for info()

warn(...), _warn,

error(...), _error,

}In addition, there is a boolean indicator to check which levels are active through corresponding underscored property, for example, LOG._debug is true if debug is enabled.

Recommendations

Leave formatting to the log functions — for example don't expensively construct debug messages, which aren't logged at all if debug is not switched on. For example:

js// DONT: const { format } = require('util') LOG.debug (`Expected ${arg} to be a string, but got: ${format(value)}`) // DO: LOG.debug ('Expected', arg, 'to be a string, but got', value)Check levels explicitly — to further minimize overhead you can check whether a log level is switched on using the boolean

Logger._<level>properties like so:jsconst LOG = cds.log('sql') LOG._info && LOG.info ('whatever', you, 'like...')

cds.log.format

Setting Formats for New Loggers

You can provide a custom log formatter function by setting cds.log.format programmatically as shown below, for example in your custom server.js.

// the current default:

cds.log.format = (id, level, ...args) => [ `[${id}]`, '-', ...args ]// a verbose format:

const _levels = [ 'SILENT', 'ERROR', 'WARN', 'INFO', 'DEBUG', 'TRACE' ]

cds.log.format = (id, level, ...args) => [

'[', (new Date).toISOString(),

'|', _levels[level].padEnd(5),

'|', cds.context?.tenant || '-',

'|', cds.context?.id || '-',

'|', id, '] -', ...args

]Formatter functions are expected to return an array of arguments, which are passed to the logger functions — same as the arguments for console.log().

Setting Formats for Existing Loggers

You can also change the format used by newly or formerly constructed loggers using .setFormat() function:

const _levels = [ 'SILENT', 'ERROR', 'WARN', 'INFO', 'DEBUG', 'TRACE' ]

const LOG = cds.log('foo') .setFormat ((id, level, ...args) => [

'[', (new Date).toISOString(),

'|', _levels[level].padEnd(5),

'|', cds.context?.tenant || '-',

'|', cds.context?.id || '-',

'|', id, '] -', ...args

])cds.log.levels

Constants of supported log levels:

cds.log.levels = {

SILENT: 0, // all log output switched off

ERROR: 1, // logs errors only

WARN: 2, // logs errors and warnings only

INFO: 3, // logs errors, warnings and general infos

DEBUG: 4, // logs errors, warnings, info, and debug

// (and trace when using default logger implementation)

TRACE: 5, // most detailed log level

SILLY: 5, // alias for TRACE

VERBOSE: 5 // alias for TRACE

}You can use these constants when constructing loggers, for example:

const LOG = cds.log('foo', cds.log.levels.WARN)Configuring Log Levels

Configure initial log-levels per module through cds.log.levels, for example like that in your package.json:

{

"cds": {

"log": {

"levels": {

"sql": "debug",

"cds": "info"

}

}

}

}See pre-defined module names below.

Programmatically Set Log Levels

You can specify a default log level to use when constructing a logger as shown above. When called subsequently with a different log level, the cached and shared logger's log level will be changed dynamically. For example:

// some-module.js

const LOG = cds.log('foo') // using default log level 'info'// some-other-module.js

const LOG = cds.log('foo') // shares the same logger as above// some-controller-module.js

cds.log('foo','debug') // switches the 'foo' logger to 'debug' levelLog Levels as Used by the CAP Node.js Runtime

The CAP Node.js runtime uses the following guidelines with regards to which log level to use in which situation:

error: Something went horribly wrong and it's unclear what to do (that is, an unexpected error).warn: Something off the happy trail happened, but it can be handled (that is, an expected error).info: Brief information about what is currently happening.debug: Detailed information about what is currently happening.trace/silly/verbose(not used by the CAP Node.js runtime): Exhaustive information about what is currently happening.

cds.log.Logger

Constructs a new logger with the method signature of { trace, debug, log, info, warn, error } (cf. console). The default implementation maps each method to the equivalent methods of console.

You can assign different implementations by exchanging the factory with your own, for example, in order to integrate advanced logging frameworks such as winston.

Arguments

label— the log label to use with each log output, if applicablelevel— the log level to enable → 0=off, 1=error, 2=warn, 3=info, 4=debug, 5=trace

Using winston Loggers

Prerequisites: You need to add winston to your project:

npm add winstonBeing designed as a simple log facade, cds.log can be easily integrated with advanced logging frameworks such as winston. For example, using the built-in convenience method cds.log.winstonLogger() in your project's server.js like that:

cds.log.Logger = cds.log.winstonLogger()You can specify winston custom options to that method as documented for winston.createLogger(), for example like that:

cds.log.Logger = cds.log.winstonLogger({

format: winston.format.simple(),

transports: [

new winston.transports.Console(),

new winston.transports.File({

filename: 'errors.log',

level: 'error'

})

],

})Custom Loggers

Custom loggers basically have to return an object fulfilling the console-like cds.log loggers API as in this example:

const winston = require("winston")

const util = require('util')

const cds = require('@sap/cds')

cds.log.Logger = (label, level) => {

// construct winston logger

const logger = winston.createLogger({

levels: cds.log.levels, // use cds.log's levels

level: Object.keys(cds.log.levels)[level],

transports: [new winston.transports.Console()],

})

// winston's log methods expect single message strings

const _fmt = (args) => util.formatWithOptions(

{colors:false}, `[${label}] -`, ...args

)

// map to cds.log's API

return Object.assign (logger, {

trace: (...args) => logger.TRACE (_fmt(args)),

debug: (...args) => logger.DEBUG (_fmt(args)),

log: (...args) => logger.INFO (_fmt(args)),

info: (...args) => logger.INFO (_fmt(args)),

warn: (...args) => logger.WARN (_fmt(args)),

error: (...args) => logger.ERROR (_fmt(args)),

})

}Actually, the above is essentially the implementation of cds.log.winstonLogger().

DEBUG env variable

Use env variable DEBUG to quickly switch on debug output from command line like that:

DEBUG=app,sql cds watch

DEBUG=all cds watchValues can be

- comma-separated list of logger ids, or

- the value

allto switch on all debug output.

Matching multiple values of DEBUG

When obtaining loggers with cds.log() you can specify alternate ids that will all be matched against the entries of the DEBUG env variable; for example:

const LOG = cds.log('db|sql')Will be debug-enabled by both, DEBUG=db, as well as DEBUG=sql ....

Note: The alternative ids specified after | have no impact on the unique logger ids. That is, the logger above will have the id 'db', while 'sql' will only be used for matching against DEBUG env variable.

Configuration

Configuration for cds.log() can be specified through cds.env.log, for example like that in your package.json:

{

"cds": {

"log": {

"levels": {

"sql": "debug",

"cds": "info"

}

}

}

}The following configuration options can be applied:

levels— configures log levels for logged modules. The keys refer to the loggers'id, the values are lower-case names of log levels.user— Specifytrueto log the user's ID (req.user.id) asremote_user(Kibana formatter only). Consider the data privacy implications! Default:false.sanitize_values— Specifyfalseto deactivate the default behavior of sanitizing payload data in debug logs in production. Default:true.

Common IDs

The runtime uses the same logger facade, that is cds.log(). For each component, it requires a separate logger. So projects can set different log levels for different components/layers. The following table lists the ids used to set the log levels:

| Component | Logger IDs(s) |

|---|---|

| Server and common output | cds |

| CLI output | cli |

| CDS build output | build |

| Application Service | app |

| Databases | db|sql |

| Messaging Service | messaging |

| Remote Service | remote |

| AuditLog Service | audit-log |

| OData Protocol Adapter | odata |

| REST Protocol Adapter | rest |

| GraphQL Protocol Adapter | graphql |

| Authentication | auth |

| Database Deployment | deploy |

| Multitenancy and Extensibility | mtx |

Logging in Development

During development, we want concise, human-readable output in the console, with clickable stack traces in case of errors. You should not be overloaded with information that is additionally obfuscated by a bad rendering. Hence, console.log(), that makes use of util.format() out of the box, with raw arguments is a good choice.

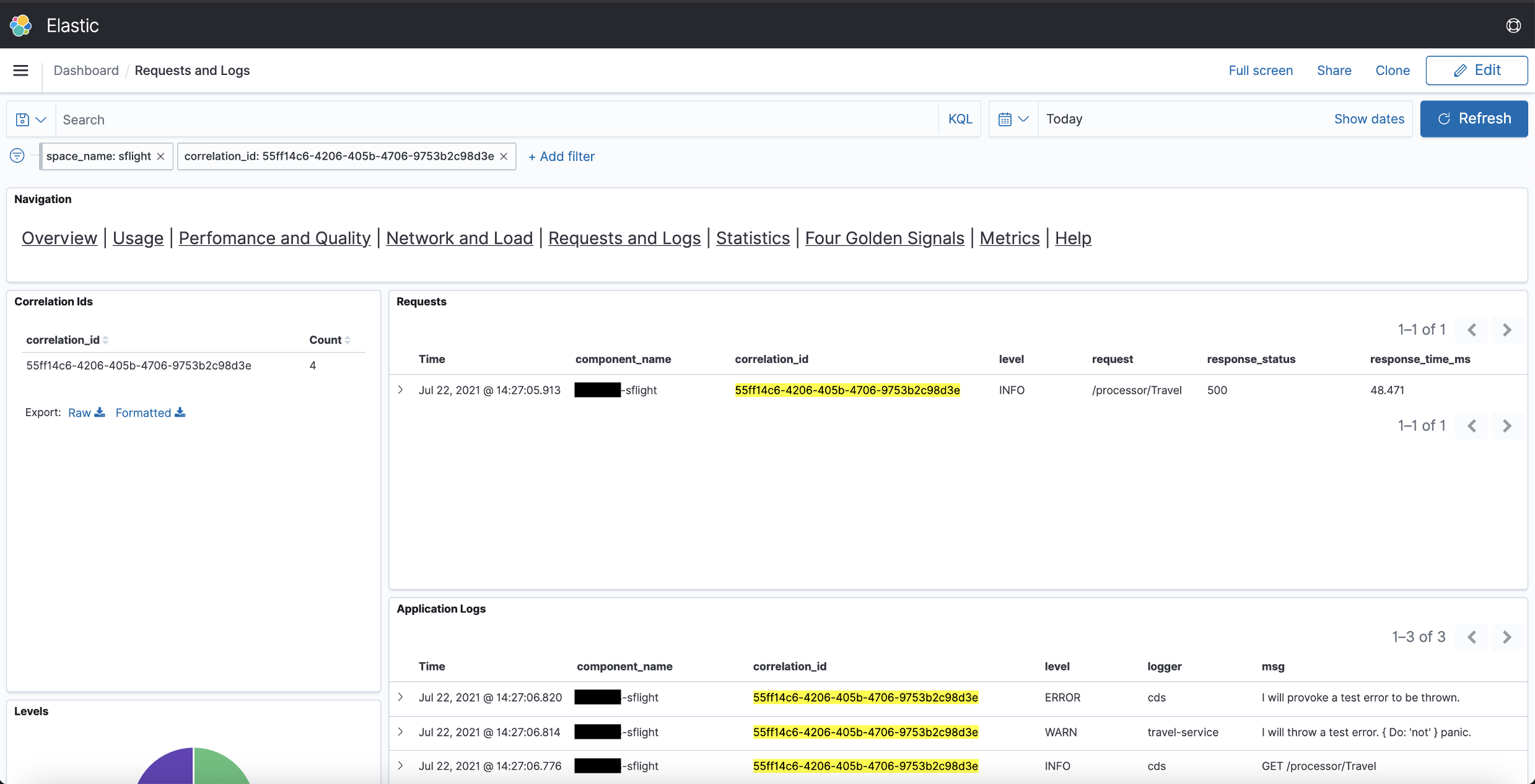

The plain log formatter, which is the default in non-production environments, prepends the list of arguments with [<module> -]. The following screenshot shows the log output for the previous warning and rejection with the plain log formatter.

The plain log formatter is the default formatter in non-production.

Logging in Production

SAP BTP offers two services, SAP Cloud Logging and SAP Application Logging Service, to which bound Cloud Foundry applications can stream logs. In both services, operators can access and analyze observability data, as described in Access and Analyze Observability Data for SAP Cloud Logging and Access and Analyze Application Logs, Container Metrics and Custom Metrics for SAP Application Logging Service. To get connected with either of those services, the application needs to be bound to the respective service instance(s) as described for SAP Cloud Logging and SAP Application Logging Service.

Additionally, the log output needs to be formatted in a way that enables the respective dashboard technology to optimally support the user, for example, filtering for logs of specific levels, modules, status, etc.

The JSON log formatter constructs a loggable object from the passed arguments as well as cds.context and the headers of the incoming request (if available).

The JSON log formatter is the default formatter in production.

TIP

Since @sap/cds 7.5, running cds add kibana-logging or setting cds.features.kibana_formatter: true are no longer needed. If you want to opt-out of the JSON formatter in production, set cds.log.format: plain.

Further, there are two formatting aspects that are activated automatically, if appropriate, and add the following information to the loggable object:

- Running on Cloud Foundry:

tenant_subdomain,CF_INSTANCE_IPand information fromVCAP_APPLICATION - Bound to an instance of the SAP Application Logging Service or SAP Cloud Logging:

categoriesand custom fields as described in Custom Fields

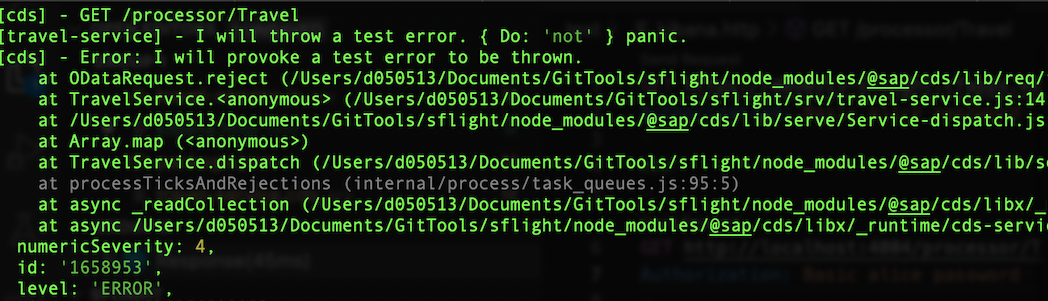

The following screenshot shows the log output for the rejection in the previous example with the JSON log formatter including the two aspects.

WARNING

The SAP Application Logging Service offers different plans with different quotas. Please make sure the plan you use is sufficient, that is, no logs are being dropped so that the information is available in Kibana. As soon as logs are dropped, you cannot reliably assess what is going on in your app.

Header Masking

Some header values shall not appear in logs, for example when pertaining to authorization. Configuration option cds.log.mask_headers allows to specify a list of matchers for which the header value shall be masked. Masked values are printed as ***. The default value is ["/authorization/i", "/cookie/i", "/cert/i", "/ssl/i"].

WARNING

In case your application shares any sensitive data (for example, secrets) via headers, please ensure that you adjust the configuration as necessary.

TIP

In the log entry, header field names are normalized to lowercase with _ instead of -. Make sure your matchers work on the original header name, for example, "/Foo-Bar/" instead of the normalized "/foo_bar/".

Custom Fields

Information that is not included in the list of supported fields of the SAP Application Logging Service can be shown as additional information. This information needs to be provided as custom fields.

By default, the JSON formatter uses the following custom fields configuration for SAP Application Logging Service:

{

"log": {

"als_custom_fields": {

// <key>: <index>

"query": 0, //> sql

"target": 1, "details": 2, //> generic validations

"reason": 3 //> errors

}

}

}Up to 20 such custom fields can be provided using this mechanism. The advantage of this approach is that the additional information can be indexed. Besides being a manual task, it has the drawback that the indexes should be kept stable.

Background

The SAP Application Logging Service requires the following formatting of custom field content inside the JSON object that is logged:

{

...,

'#cf': {

strings: [

{ k: '<key>', v: '<value>', i: <index> },

...

]

}

}That is, a generic collection of key-value-pairs that are treated as opaque strings.

The information is then rendered as follows:

custom.string.key0: <key>

custom.string.value0: <value>Hence, in order to analyze, for example, the SQL statements leading to errors, you'd need to look at field custom.string.value0 (given the default of cds.env.log.als_custom_fields).

In a more practical example, the log would look something like this:

msg: SQL Error: Unknown column "IDONTEXIST" in table "DUMMY"

...

custom.string.key0: query

custom.string.value0: SELECT IDONTEXIST FROM DUMMYWithout the additional custom field query and it's respective value, it would first be necessary to reproduce the issue locally to know what the faulty statement is.

For SAP Cloud Logging, the JSON formatter uses the following default configuration:

{

"log": {

"cls_custom_fields": [

"query", //> sql

"target", "details", //> generic validations

"reason" //> errors

]

}

}In order for the JSON formatter to detect the binding to SAP Cloud Logging via user-provided service, the user-provided service must have either tag cloud-logging or Cloud Logging. (For existing user-provided services, tags can be added via cf update-user-provided-service.)

The key-value pairs can either be part of the first argument or an exclusive object thereafter:

LOG.info({ message: 'foo', reason: 'bar' })

LOG.info('foo', { reason: 'bar' })As always, both defaults are overridable via cds.env.

Request Correlation

Unfortunately, there is no standard correlation ID header. x-correlation-id and x-request-id are the most commonly used, but SAP products often use x-correlationid (that is, without the second hyphen) and SAP BTP uses x-vcap-request-id when logging incoming requests.

As CAP aims to be platform independent, we check an array of headers (or generate a new ID if none hits) and ensure the value available at cds.context.id as well as req.headers['x-correlation-id']:

const { headers: h } = req

const id = h['x-correlation-id'] || h['x-correlationid']

|| h['x-request-id'] || h['x-vcap-request-id']

|| uuid()

if (!cds.context) cds.context = { id }

req.headers['x-correlation-id'] = cds.context.idSubsequently, the JSON log formatter (see Logging in Production) sets the following fields:

cds.context.id→correlation_id- Request header

x_vcap_request_id→request_id - Request header

traceparent(cf. W3C Trace Context) →w3c_traceparent

Specifically field w3c_traceparent is then used by both SAP Application Logging Service and SAP Cloud Logging to determine field trace_id in order to correlate requests, logs, and traces across multiple applications.

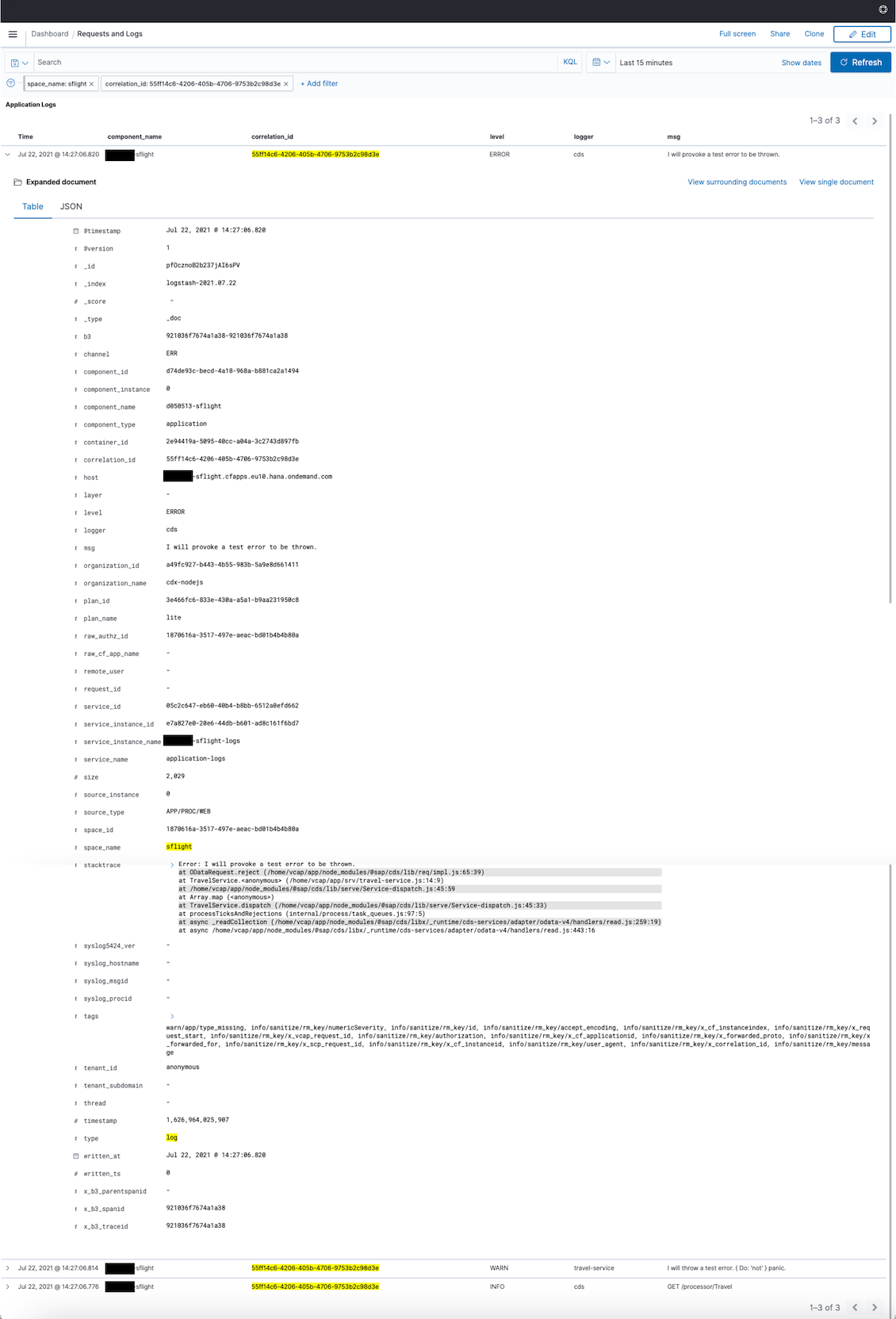

The following screenshot shows an example for log correlation based on field correlation_id in a log analytic dashboard of the SAP Application Logging Service for SAP BTP.